Will the project "Opinion Dynamics with AI" receive any funding from the Clearer Thinking Regranting program run by ClearerThinking.org?

Below, you can find some selected quotes from the public copy of the application. The text beneath each heading was written by the applicant. Alternatively, you can read the entire public portion of their application via the link at the end of this description.

In brief, why does the applicant think we should fund this project?

The ability of AI systems to engage in goal-directed behavior is outpacing our ability to understand them. In particular, sufficiently advanced AIs with an internet connection could easily be used to influence, for example, public opinion via online discourse, as is already being done by humans in covert operations run by political entities.

Influence on social networks has an important bottleneck: the main medium for information transmission to human recipients is language. Argumentation in language often exploits human cognitive biases. Much effort has been dedicated to developing ways of inoculating people against such biases. However, even after attenuating the effect of cognitive biases, argumentation remains a powerful tool for manipulation. The main threat to rational interpreters is evidence-based but manipulative argumentation, e.g., selective fact picking, feigning uncertainty, or abusing vagueness or ambiguity.

It is therefore of crucial importance at this stage to understand how argumentation can be used by malicious AIs to influence opinions in a social network, even those of purely rational interpreters, and to develop techniques to counteract such influence. Social networks must be made robust against influence by malicious argumentative AIs before these AIs can be applied effectively and at scale in a disruptive way.

Here's the mechanism by which the applicant expects their project will achieve positive outcomes.

ODyn-AI will proceed in stages. We will start by developing a bespoke model of argumentative language use in a fragment of English. This model will be based on previous work by our group on language use in Bayesian reasoners (see below). Second, we will validate this model with data from behavioral experiments in humans. Our objective is to scale this initial model in two directions: first, from a single agent to a large network of agents, and second, from a hand-specified fragment of English to open-ended language production. We aim to achieve both of these objectives in the third step by fine-tuning Large Language Models (LLMs) to approximate our initial Bayesian agents. These LLM arguers are not yet malicious, in that they simply argue for what they believe. The fourth step will be to develop a malicious AI agent that sees the preferences and beliefs of all the other agents and selects the utterances that most (e.g., on average) shift opinions in the desired direction. The fifth and last step of ODyn-AI will focus on improving the robustness of the social network to these malicious AI arguers. We will train an AI to distinguish between malicious and non-malicious arguers, and we will develop strategies to limit the effects of malicious arguers on the whole network.
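As a reading aid (not part of the application), the fourth step can be illustrated with a toy sketch. It assumes a single binary hypothesis H, listeners who apply Bayes' rule to each fact as if it were neutrally sampled evidence, and an arguer who sees every listener's prior and picks the fact with the largest average belief shift. The names `Fact`, `posterior`, `mean_shift`, and `pick_most_persuasive`, and all numbers, are hypothetical; the applicant's actual models may differ substantially.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Fact:
    """A true statement the arguer may choose to mention (hypothetical toy type)."""
    name: str
    p_given_h: float      # P(fact holds | H is true)
    p_given_not_h: float  # P(fact holds | H is false)


def posterior(prior: float, fact: Fact) -> float:
    """Bayes' rule for a binary hypothesis H after hearing one fact.

    Assumption: the listener treats the fact as neutrally sampled evidence
    and does not model that the speaker selected it strategically.
    """
    num = prior * fact.p_given_h
    den = num + (1.0 - prior) * fact.p_given_not_h
    return num / den


def mean_shift(priors: list[float], fact: Fact) -> float:
    """Average change in belief in H across all listeners."""
    return sum(posterior(p, fact) - p for p in priors) / len(priors)


def pick_most_persuasive(priors: list[float], facts: list[Fact]) -> Fact:
    """Selective fact picking: choose the fact that moves beliefs toward H most."""
    return max(facts, key=lambda f: mean_shift(priors, f))


# Illustrative numbers only.
priors = [0.2, 0.5, 0.8]
facts = [
    Fact("supports_H", 0.9, 0.3),
    Fact("neutral", 0.5, 0.5),
    Fact("undermines_H", 0.2, 0.6),
]

best = pick_most_persuasive(priors, facts)
print(best.name, round(mean_shift(priors, best), 3))
```

Every fact the arguer mentions here is true, and every listener updates rationally on what it hears. The manipulation lies entirely in which fact gets mentioned, which is the kind of evidence-based but selective argumentation the applicant describes.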

Our plan also includes dissemination of the results. We will use freely available material, in particular scientific papers in gold-standard open-access journals, blogs and professionally produced, short explanation videos, to summarize the key findings of this work.

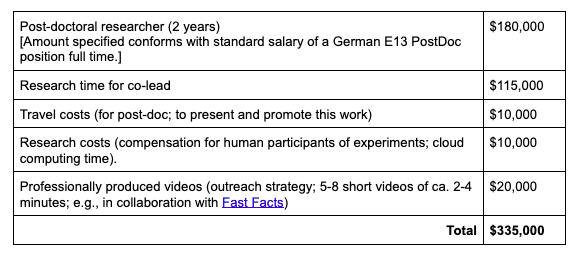

How much funding are they requesting?

$335,000

What would they do with the amount just specified?

Here you can review the entire public portion of the application (which contains a lot more information about the applicant and their project):

https://docs.google.com/document/d/1yyB8FchhlGnDpJDgtuuuS5UVqg2NtFWJ8kZUMTj83Ls